‘We’re all going to die’

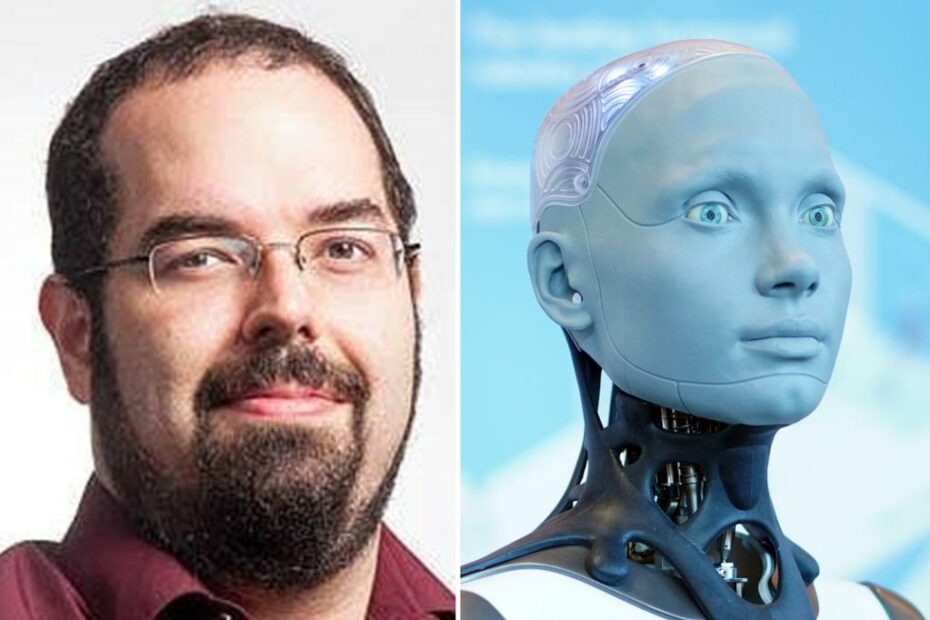

Prominent Silicon Valley researcher Eliezer Yudkowsky has raised concerns about the rapid advancement of artificial intelligence (AI), warning that the consequences for humans could be dire. Yudkowsky, who is regarded as extreme by his tech peers, echoed the worries expressed by figures such as Elon Musk, who advocated for a six-month pause on AI research. In an interview with Bloomberg News, Yudkowsky said he believes humanity is not ready for AI and does not understand it, which could lead to catastrophic outcomes.

Yudkowsky, a research fellow at the Machine Intelligence Research Institute, emphasized the significance of the current state of AI and its trajectory. He expressed concern that data analysts do not fully comprehend the intricacies of the latest version of GPT, an AI-powered language model developed by OpenAI. Yudkowsky admitted that AI research has progressed further than he had anticipated, stating, “I think it gets smarter than us.”

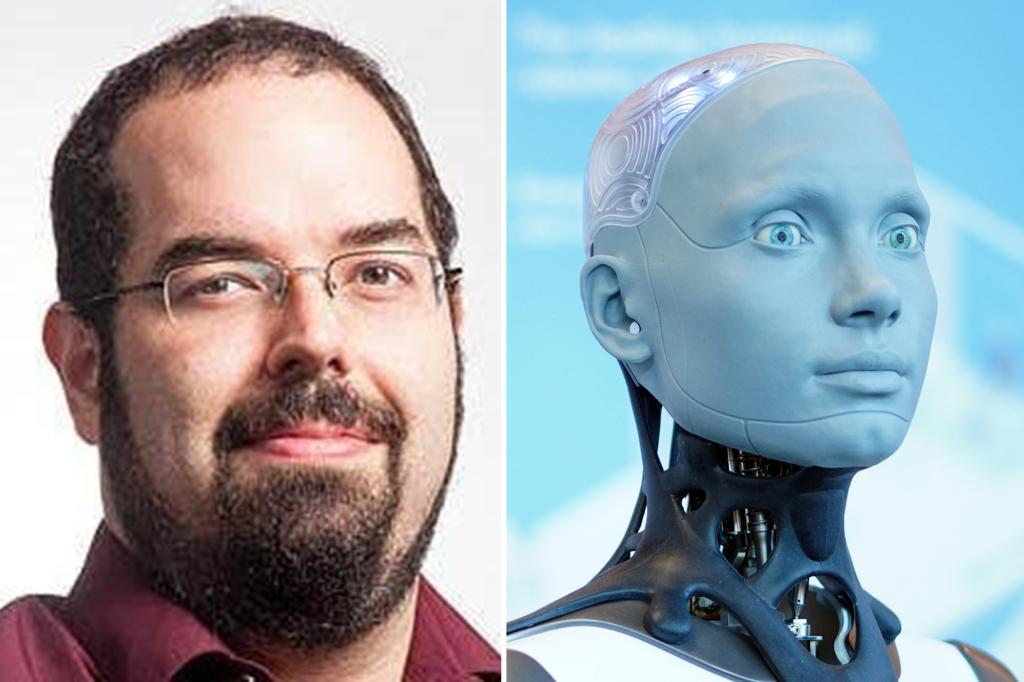

The researcher emphasized the disparity between the pace at which AI is acquiring knowledge and humans’ ability to comprehend it. “Capabilities — how smart these things are — has been running way ahead of progress in understanding how they work and being able to shape their behavior in detail,” Yudkowsky explained. He voiced frustration that there is still a lack of understanding regarding the inner workings of AI models such as GPT-4, calling for substantial investment in research to decipher their complexities.

Despite acknowledging the need for more scientists to work on understanding AI, Yudkowsky expressed doubt that even with increased focus, significant progress could be made within the next few years. His concerns align with those of Musk and other tech figures, who share anxieties about AI systems surpassing human intelligence and potentially spiraling out of control.

These concerns have prompted nations worldwide to scramble for regulatory measures to address the risks associated with AI. The European Union is at the forefront, with the expected approval of its AI Act later this year. Yudkowsky reiterated his belief that AI-powered machines will eventually surpass human intelligence, reinforcing the urgency to gain a better understanding of their inner workings.

Critics often argue that the existential risks highlighted by AI makers feed the hyperbole surrounding the technology’s capabilities, and that regulation should focus on immediate real-world problems rather than speculative future scenarios. Separately, a new Netflix documentary sheds light on the ongoing arms race among world governments to develop AI-controlled weaponry.

FAQs:

Q: Who is Eliezer Yudkowsky?

A: Eliezer Yudkowsky is a prominent Silicon Valley researcher and AI theorist who has raised concerns about the rapid advancement of artificial intelligence.

Q: What are Yudkowsky’s concerns about AI?

A: Yudkowsky, along with other tech figures such as Elon Musk, warns that humans are not fully prepared for the consequences of AI advancement. He fears that AI could surpass human intelligence, with potentially catastrophic outcomes.

Q: What is GPT?

A: GPT, or Generative Pre-trained Transformer, is an AI-powered language model developed by OpenAI. Yudkowsky expresses concern that the latest version, GPT-4, is not fully understood by data analysts.

Q: What are the concerns surrounding AI development?

A: Worries include AI systems outsmarting humans, potential misuse, and a lack of comprehensive understanding of AI models. Governments are racing to implement regulations to address the risks and challenges associated with AI technology.

Q: What is the EU AI Act?

A: The EU AI Act is a regulatory framework proposed by the European Union to govern the development and deployment of artificial intelligence. Its approval is expected later this year.

Q: Why is there criticism of AI makers regarding their warnings?

A: Critics argue that warnings about existential risks posed by AI may overshadow the need for immediate regulations to address real-world issues. They call for a balanced approach to AI development and regulation.

Q: What does the Netflix documentary reveal about AI?

A: The documentary highlights an ongoing arms race among world governments to develop AI-controlled weaponry, emphasizing the potential risks and challenges associated with AI advancement.

‘We’re all going to die’

Prominent Silicon Valley researcher Eliezer Yudkowsky has raised concerns about the rapid advancement of artificial intelligence, warning that the consequences for humanity could be dire. Yudkowsky, an AI theorist known for his extreme views, spoke to Bloomberg News, echoing the worries expressed by other tech figures such as Elon Musk, who have called for a six-month pause on AI research. Musk had previously stated that there is a “non-zero chance” that AI could pose a threat to humanity by turning into a “Terminator.”

Yudkowsky, a research fellow at the Machine Intelligence Research Institute, emphasized the urgency of the situation, stating that the current state of AI development is exciting but that the future implications are much more significant. He expressed particular concern that data analysts do not fully understand the latest version of GPT, an AI-powered language model created by OpenAI. Yudkowsky admitted that GPT has progressed further than he expected and mentioned his essay in Time, in which he advocated airstrikes against “rogue” data centers.

According to Yudkowsky, AI is acquiring knowledge at a faster pace than humans can comprehend. He explained that the capabilities of AI systems are outpacing progress in understanding their inner workings and controlling their behavior. Of the latest edition of GPT, he said, “We have no idea what’s going on inside GPT-4.” Yudkowsky called for increased funding to study AI, arguing that if humanity were sensible, it would invest $100 billion annually to unravel the mysteries of AI development.

Yudkowsky’s concerns align with those of Elon Musk and other tech figures who advocate for a pause in AI research. The fear of AI systems surpassing human intelligence and becoming uncontrollable has led to calls for regulations and precautions. The rise of highly capable AI chatbots, such as ChatGPT, has exacerbated worries, prompting countries worldwide to develop regulations for AI technology. The European Union is at the forefront of this effort, with its AI Act expected to be approved later this year.

Critics, however, argue that dire warnings about the existential risks of AI may exaggerate the capabilities of these systems and deflect attention from more immediate issues that require regulation. A new Netflix documentary recently revealed that governments globally are engaged in an arms race to develop AI-controlled weaponry.

As concerns grow, it is imperative to stay focused on this topic and delve deeper into the implications and potential consequences of artificial intelligence.